Slipping through the net: Exploring online support for proscribed groups in Northern Ireland

19 April 2023

By: Ciarán O’Connor and Jacob Davey

__________________________________________________________________________

Executive Summary

On March 28, the UK Government raised the threat level for Northern Ireland-related terrorism from substantial to severe, meaning that an attack is considered highly likely. This followed the shooting of Chief Inspector John Caldwell in Omagh, County Tyrone, on February 22, an attack for which the New IRA claimed responsibility in a statement days later. The same group attempted to kill two police officers with a bomb in Strabane last November. The atmosphere has been made more febrile still by political tensions in Northern Ireland surrounding Brexit trade agreements. The increase also came just before the 25th anniversary of the Good Friday Agreement and a much-anticipated visit to Ireland by President Joe Biden.

Analysts and policymakers have paid significant attention to the online activity of Islamist and extreme right-wing communities in recent years. This focus has not been extended to the digital footprint of the threat which characterised the UK and Irish terrorism landscape for much of the second half of the 20th century. This is understandable, given that the Good Friday Agreement was signed long before social media permeated everyday life. However, the increasingly febrile environment surrounding Northern Ireland-related Terrorism (NIRT) makes it necessary to understand the extent to which social media is now playing a role in exacerbating tensions, as well as incubating the movements and organisations driving the threat.

There is evidence documenting platforms’ efforts to block users from promoting such groups. A 2021 leak of Facebook’s list of “dangerous individuals and organisations” that are blacklisted on the platform included eight entities related to Northern Ireland: four factions operating under names associated with the IRA and four loyalist paramilitary groups. However, due to a lack of attention, small online communities supportive of terrorism in Northern Ireland have been able to grow unnoticed on numerous platforms.

To help shine a spotlight on this phenomenon, ISD conducted scoping research on three leading platforms (Facebook, Instagram and TikTok) to better understand the permissive online environments that contribute to the glorification of violence and of proscribed groups, and that foster polarisation, extremism and division.

Manually searching popular social media platforms, we identified a variety of accounts, pages, groups, hashtags and content that promote or support proscribed groups linked to Northern Ireland-related Terrorism (NIRT). The UK government’s list of proscribed NIRT groups includes the Irish Republican Army (IRA), Ulster Volunteer Force (UVF), Loyalist Volunteer Force (LVF), Irish National Liberation Army (INLA), Ulster Defence Association (UDA), Ulster Freedom Fighters (UFF) and Red Hand Commando (RHC).

The entities identified by ISD during this research exercise were:

- 16 Facebook pages and (public) groups that featured content promoting the IRA, UVF, INLA, UDA and the LVF.

- 7 Instagram accounts that featured content promoting the IRA, UVF and the UDA.

- 6 TikTok hashtags and 10 TikTok accounts that featured content that occasionally expressed support for the IRA, UVF, RHC, UFF and the UDA.

We found that outright promotion of these proscribed groups is rare but still present on these platforms. More commonly, we found:

- Content that celebrates past events or atrocities carried out by these groups;

- Content from users that references sectarian attacks on their community by one proscribed group and uses this to express sympathy and support for a retaliatory attack by another proscribed group;

- Content that depicts offline material which promotes a proscribed group (photos of Republican or Loyalist murals, for example);

- Intersections between the promotion of NIRT and right-wing and Islamist extremism, through the use of memes that combine extremism and internet culture and the ‘terrorwave’ aesthetic; and

- Content that promotes proscribed NIRT groups appearing alongside content that doesn’t explicitly promote these groups. Due to historical and cultural interest in The Troubles, movie clips, video games and news footage referencing NIRT are popular on social media, but this appears to allow NIRT-supporting content to slip through the net via a gap in the enforcement of platform policies. This finding illustrates the significant content moderation challenge this poses for platforms, as evidenced below.

Glorification of Past Violence

Our analysis revealed that the most common variety of content celebrated and glorified past violence, attacks and murders committed by proscribed groups in Northern Ireland. This was particularly evident on Facebook where ISD identified 16 Facebook pages and (public) groups that featured such content. These included 8 pages/groups that featured support for proscribed Republican/Nationalist groups and 8 pages/groups that featured support for proscribed Loyalist/Unionist groups.

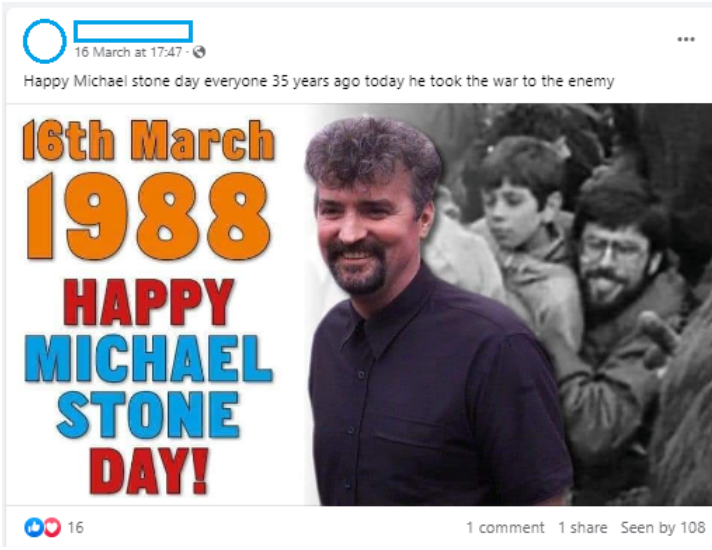

On these pages and groups, Facebook users typically praise or express support for members of, or people linked to, these proscribed groups who carried out violence or acts of murder inspired by sectarian ideologies. For example, 3 Facebook pages/groups identified by ISD contained content praising the actions of Michael Stone, a former member of the UDA who killed three people in a gun and grenade attack in west Belfast during an IRA funeral on March 16, 1988. One such post, published on March 16, 2023, contained a photo of Stone and was titled “Happy Michael Stone Day.”

Image 1: Facebook post celebrating Michael Stone, ex-member of the UDA.

Conversely, content celebrating retaliatory attacks by proscribed groups was also recorded by ISD. Content such as this fosters continued division, polarisation and acrimony and should be regarded as support for violence and sectarian hatred.

Use of Memes

Memes, often incorporating videos or images taken from news footage or TV/movies about The Troubles, are also used to express support for proscribed groups in Northern Ireland. This tactic is also commonly used by right-wing extremists and Islamist extremists, highlighting the convergent nature of tactics employed by terrorist-endorsing communities.

Memes were popularised as a propaganda tool by the emerging ‘alt right’ movement in the early 2010s and have subsequently become a dominant medium for propagandising across terrorist and extremist communities. The production of memes in the context of Northern Ireland highlights how support for proscribed groups in the region continues to evolve over time, fusing with online communities and meme subcultures that combine extremism and internet culture. This was particularly evident on Instagram, where ISD identified 7 accounts that featured content promoting proscribed groups: 3 that featured support for proscribed Republican/Nationalist groups and 4 that featured support for proscribed Loyalist/Unionist groups.

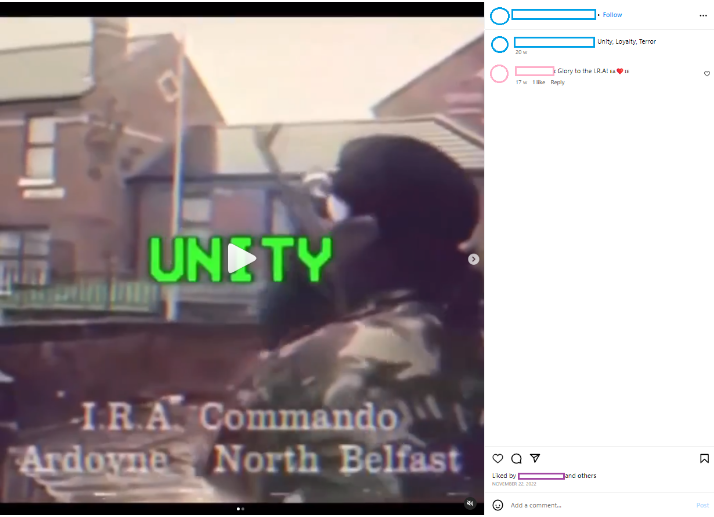

For example, ISD discovered numerous posts praising the actions of the IRA through ‘terrorwave’ video edits. This is a popular aesthetic used predominantly by right-wing extremist communities that makes use of retro-style video effects, usually mixed with synthesizer-based electronic music and bold, neon on-screen text, to present videos as something akin to old VHS footage. As part of a wider piece about ‘terrorwave,’ the Global Network on Extremism and Technology explains that this is a visual aesthetic that glorifies militancy and terrorism. In our sample, videos captioned ‘Unity. Loyalty. Terror’ contained footage of IRA militants firing weapons, as well as footage of bomb explosions and training procedures.

Image 2: Instagram post promoting the IRA in the ‘terrorwave’ aesthetic.

Content Moderation Challenges

Is content about proscribed groups permitted in any form on platforms? Facebook, Instagram and TikTok prohibit organisations or individuals that engage in or promote violence, including designated terrorist organisations. These policies extend to content that praises, supports, glorifies or otherwise promotes these entities or individuals associated with them. The platforms do, however, make allowances in their community guidelines for content that is educational, satirical and/or critical, or that comes from news or documentary productions.

Platforms have made efforts to recognise the public interest value in permitting some content about such groups, but deciding what constitutes supportive content versus objective reporting or commentary presents a major challenge in this context. As evidenced in this analysis, platform policies regarding violent and dangerous organisations are being applied inconsistently, and content that promotes or expresses support for proscribed extremist groups in Northern Ireland is allowed to remain on these platforms.

This challenge for policy enforcement was evident on TikTok where ISD identified 6 TikTok hashtags and 10 TikTok accounts that featured content that expressed support for the IRA, RHC, UVF and UDA.

Not every video using these hashtags is supportive of the proscribed group referenced, however, illustrating the challenge of moderating content in this context. ISD reviewed the top ten videos displayed on TikTok for each of the six hashtags and found that, in a sample of 55 videos (one of the hashtags comprised only 5 videos), 58% (32 videos) did not express support for a proscribed group.

| Hashtag | No. of videos reviewed | No. of videos that expressed support for a proscribed group |
| --- | --- | --- |
| #IrishRepublicanArmy | 10 | 2 |
| #ProvisionalIRA | 10 | 1 |
| #UlsterVolenteerForce | 10 | 3 |
| #UlsterVolunteerForce | 5 | 5 |
| #RedHandCommando 🇬🇧🇬🇧 | 10 | 6 |
| #UlsterDefenseAssociation | 10 | 6 |

Table 1: Number of TikTok videos reviewed under each hashtag and number that expressed support for a proscribed group.
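The sample-wide figure can be checked directly against the counts in Table 1. The short sketch below is illustrative only: the variable and function names are assumptions introduced here, not part of ISD's methodology, and the counts are simply copied from the table.

```python
# Counts copied from Table 1: hashtag -> (videos reviewed, videos expressing support).
# The dictionary and function names are hypothetical, used only for this check.
TABLE_1 = {
    "#IrishRepublicanArmy": (10, 2),
    "#ProvisionalIRA": (10, 1),
    "#UlsterVolenteerForce": (10, 3),
    "#UlsterVolunteerForce": (5, 5),
    "#RedHandCommando": (10, 6),
    "#UlsterDefenseAssociation": (10, 6),
}


def summarise(counts):
    """Return (total reviewed, total supportive, % not supportive)."""
    reviewed = sum(r for r, _ in counts.values())
    supportive = sum(s for _, s in counts.values())
    pct_not_supportive = 100 * (reviewed - supportive) / reviewed
    return reviewed, supportive, pct_not_supportive


reviewed, supportive, pct_not_supportive = summarise(TABLE_1)
print(f"{supportive} of {reviewed} videos expressed support; "
      f"{pct_not_supportive:.0f}% did not")

# Per-hashtag share of reviewed videos that expressed support.
for tag, (r, s) in TABLE_1.items():
    print(f"{tag}: {100 * s / r:.0f}%")
```

The totals match the 55-video sample and the 58% figure, while the per-hashtag shares range from 10% to 100%, which underlines how uneven the picture is from one hashtag to the next.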

Most videos did not explicitly express support for the relevant proscribed group. Instead, content was typically educational, historical or unrelated to glorifying conflict, as seen with most of the content tagged under the hashtag #IrishRepublicanArmy (with over 15 million views). These terms have uses beyond their links to the proscribed groups of Northern Ireland, highlighting another content moderation challenge for platforms.

For example, the Irish Republican Army (IRA) is a name used by various paramilitary organisations on the island of Ireland throughout the 20th and 21st centuries, but it was first the name of the army, often now referred to as the “Old IRA,” that fought in the Irish War of Independence from 1919 to 1921. Most videos tagged with #IrishRepublicanArmy that were reviewed by ISD featured content about the Old IRA and/or content about Ireland’s War of Independence, in the form of movie, news or documentary clips.

In another example, the hashtags #UlsterVolenteerForce and #UlsterVolunteerForce feature content about flute and marching bands which are not related to the paramilitary UVF. Yet ISD was still able to identify videos using these hashtags which displayed support for the proscribed groups and their role during The Troubles, alongside content unrelated to promotion of these groups. What’s more, keyword searches for terms like “Irish Republican Army,” “IRA,” “Ulster Volunteer Force” and “UVF” all return videos that contain content expressing support for these proscribed groups.

This highlights a significant content moderation challenge for TikTok and raises concerns about potential gaps between platform policies and their enforcement. It is understandable that these terms have cultural use beyond explicit promotion of these groups, but the same terms refer to groups that have been proscribed by the UK government for activities including committing, participating in, preparing for, promoting, encouraging or glorifying terrorism.

Conclusion

Although this analysis does not provide a comprehensive evidence base across the social media ecosystem, it does reveal the presence of NIRT-endorsing communities across Facebook, Instagram and TikTok. There are evident challenges in identifying and analysing this material. These stem in part from the presence of anodyne or factual material associated with the discussion of some of these groups, and in part from emergent online communities that create content in support of paramilitary and terrorist violence, often overlaid with ironic nods to music, graphic design and other retro aesthetics that are prevalent in wider meme culture.

We can’t necessarily know the intent behind a specific piece of content, meaning these dynamics make analysis of such content very difficult for platforms and researchers alike. Nevertheless, ISD still identified clusters of activity that are likely in breach of platform terms of service relating to support for proscribed organisations.

This is potentially linked to the fact that NIRT has been superseded in attention by other terrorist threats in recent years and suggests an apparent inconsistency in the application of terms of service on social media platforms. This inconsistency is creating permissive environments for people who genuinely believe in violence or support paramilitary groups to operate with minimal friction online and effectively slip through the net with little fuss.