How Creators on TikTok are Using Their Profile Details to Promote Hate

24th August 2021

A new ISD report aims to provide an in-depth analysis of the state of extremism and hate on TikTok. It is the culmination of three months of research into a sample of 1,030 TikTok videos, equivalent to just over eight hours of content, that were used to promote hatred and glorify extremism and terrorism on the platform. The analysis of the TikTok content featured in the report was conducted between 1 and 16 July 2021.

This dispatch is an excerpt from ‘Creators, Profiles and TikTok Takedowns’, a chapter in the report that looks at how TikTok creators use their profiles to encourage hate and extremism.

_________________________________________________________________________

During the research, 491 unique TikTok accounts (i.e. creators) were analysed; 78 of these included more than one profile feature that referenced hate or extremism. Those features were:

| Profile feature | No. of accounts |
|---|---|
| Username | 71 |
| Profile image | 31 |
| Nickname | 57 |
| Profile biography | 27 |

Below is a breakdown of the most popular references in our sample.

➜ 26 profiles featured “88”, a popular white supremacist numerical reference to “HH”, or “Heil Hitler”.

➜ 24 profiles featured the SS bolts, a common neo-Nazi symbol that references the Schutzstaffel (SS) of Nazi Germany. In particular on TikTok, these were typically referenced using lightning bolt emojis – i.e. ⚡️⚡️.

➜ 16 profiles featured versions of the word “fascist” or references to famous fascists in their profile images or biographies.

➜ 16 profiles featured the Sonnenrad in their profile image.

➜ 15 profiles featured “14”, another popular white supremacist reference, in this case to the “14 words”.

➜ 9 profiles featured the terms “national socialist” or “nat soc” in one of their profile elements.

➜ 6 profiles referenced Brenton Tarrant and the Christchurch terrorist attack in their profile image.

➜ 6 profiles featured references to Paul Miller across several profile elements.

➜ 4 profiles featured the logo of the Atomwaffen Division in their profile image.

➜ 4 profiles featured Oswald Mosley in their profile image.

➜ 4 profiles featured antisemitic slurs and references to Holocaust denial.

➜ 4 profiles featured images of prominent Nazi collaborators from World War II, such as Léon Degrelle, Ion Antonescu or Jonas Noreika.

➜ 4 profiles contained “🤚🏻” in one of their profile elements, an emoji typically used as a reference to white power and white supremacy.

➜ 3 profiles featured George Lincoln Rockwell in their profile image.

➜ 2 profiles featured references to “Remove Kebab”, also known as “Serbia Strong”, an anti-Muslim propaganda song written during the Yugoslav Wars of the 1990s.

➜ 1 profile featured a biography containing a link to an article promoting the narrative of “Black-on-white crime”.

Separate from direct and obvious references to hate and extremism, some profiles also featured coded terms and references intended to be understood only by a small circle of like-minded people. These included one profile that featured the fictional name “Nating Higgers”, where the first letters of the two words are meant to be swapped to reveal the true racist meaning of the reference.

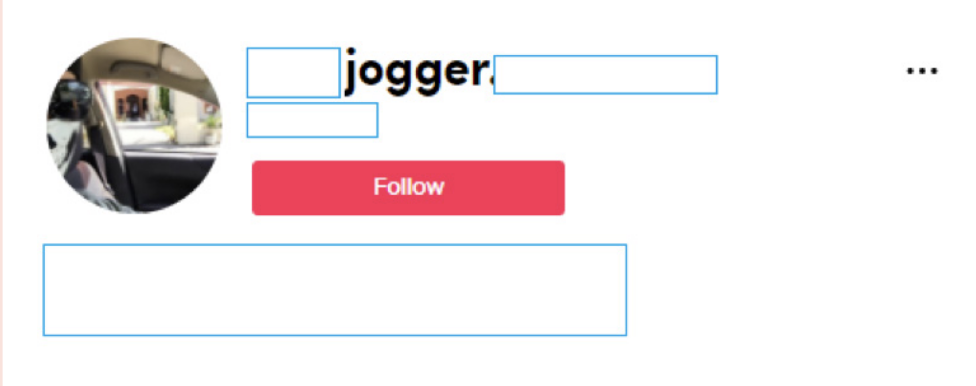

Another profile, seen in Fig. 25, uses “jogger exterminator” as its username. “Jogger” is a racial slur used to describe people of colour that originated as a meme on 4chan following the fatal shooting of Ahmaud Arbery in the US in February 2020. Beyond this term, the account also contains two other known white supremacist terms. Finally, the profile image is a selfie taken by an alleged member of a white nationalist group outside the Al Noor mosque close to the first anniversary of the Christchurch terrorist attack. The account is still live and follows hundreds of accounts; although it is public, it has not posted any video content. It could be active in posting comments on other users’ videos, but it is not possible to search for comments from a specific user through the TikTok platform or API to confirm this.

Fig. 25: A TikTok account that contains numerous references to hate and extremism in its profile details.

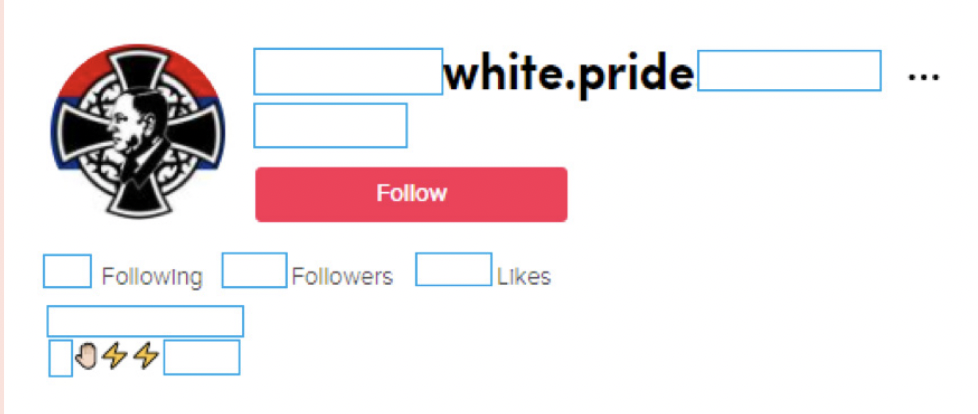

Extremist creators on TikTok routinely make use of every feature of their profile to promote hatred and, likely, to signal their interests to ideologically similar users. For example, the account seen in Fig. 26 features numerous extremist references. The username contains the white supremacist slogan “white pride”; the profile image includes a flag displaying support for Milan Nedić, a Serbian Nazi collaborator; and the biography features two lightning bolt emojis, likely references to the SS, and a white hand emoji, another likely white supremacist reference. The account has posted numerous videos promoting hatred by glorifying famous fascist politicians and war criminals, promoting the Ku Klux Klan and featuring antisemitic content. The account is still live.

Fig. 26: This TikTok account features numerous extremist references.

Analysis

It is unclear what policies TikTok has on the promotion of extremism or glorification of terrorism through profile characteristics. Each of the insights and examples above raises questions about the ease with which explicit or implicit support for extremism and terrorism is allowed through TikTok profiles. These examples suggest that TikTok has failed to take action against content that is not explicit in its support for extremism or terrorism, particularly content that conveys support via symbols and emojis. If the findings from this sample hold across the platform more widely, this may prove to be a significant gap. How do we determine whether a reference featured in a profile is supportive of hatred or extremism? In most cases there are clear and obvious references in text or photos, such as admiration of a high-profile extremist, extremist ideology or a known slur about a group of people. In other cases, it is vital to consider the full nature of an account to understand the reference.

Some TikTok accounts featured photos of known Nazi collaborators which, alongside videos glorifying other central Nazi figures such as Hitler or Himmler, could be interpreted as an expression of support. There are exceptions, and indeed, not all accounts discovered by ISD that included such references were selected for analysis. The intricacies of classifying these types of profiles suggest that content moderation judgements should be based on an account’s full activity, rather than on account characteristics in isolation. For TikTok, one of the lessons still to be learnt is that both obvious terms and veiled or coded references to hateful terms, “out groups” or extremists are used to signal support for hateful and extremist ideologies. These factors should be included in account reviews when considering whether or not to issue strikes against an account, if this is not already being done.

Of the 1,030 videos analysed as part of the research, 18.5%, or almost one fifth, are no longer available on TikTok. The majority of the removed videos come from accounts that are also no longer live on TikTok. Of the 461 accounts captured during this research, almost 13% are no longer active and have been deactivated or banned. It is encouraging to see TikTok taking action against some of this activity and some of these actors, yet the vast majority of the hateful and extremist content and accounts identified during this research are still live.

This is an excerpt from ‘Hatescape: An In-Depth Analysis of Extremism and Hate Speech on TikTok’, a new ISD report that aims to provide an in-depth analysis of the state of extremism and hate on TikTok.