How female politicians were targeted with abuse on TikTok and Instagram ahead of the 2022 US midterms

12 December 2022

By: Zoé Fourel and Cécile Simmons

_________________________________________________________________________________

Introduction

A growing body of research has followed the global increase in gender-based mis- and disinformation targeting women running for public office. During the 2020 American presidential elections, ISD found that women candidates were almost twice as likely to face abuse on Twitter as their male counterparts and were often subjected to attacks. The same study found that women from ethnic minority backgrounds faced particularly high levels of abuse. Research from the Center for Democracy & Technology corroborated ISD’s findings, showing that women candidates of color were twice as likely to be targeted with mis- and disinformation, and were more likely to receive certain forms of online abuse, including violent threats.

In 2022, ISD once again found evidence that social platforms, specifically the multimedia-first platforms TikTok and Instagram, have failed to consistently enforce their own policies to safeguard female political figures and prevent the spread of harmful, abusive content.

Online abuse is falling through the cracks of policy and moderation. The consequences include potentially discouraging women, especially from ethnic minority backgrounds, from running for office in the future. This in turn threatens progress on diversity and representation in politics.

This investigation aimed to understand the role played by hashtag recommendations on TikTok and Instagram in the amplification of gender-based abuse of women in the US political arena ahead of the 2022 midterms.

This Dispatch is a summary of a long-form investigation, available now on ISD’s website: Hate in Plain Sight: Abuse Targeting Women Ahead of the 2022 Midterm Elections on TikTok and Instagram

Methodology

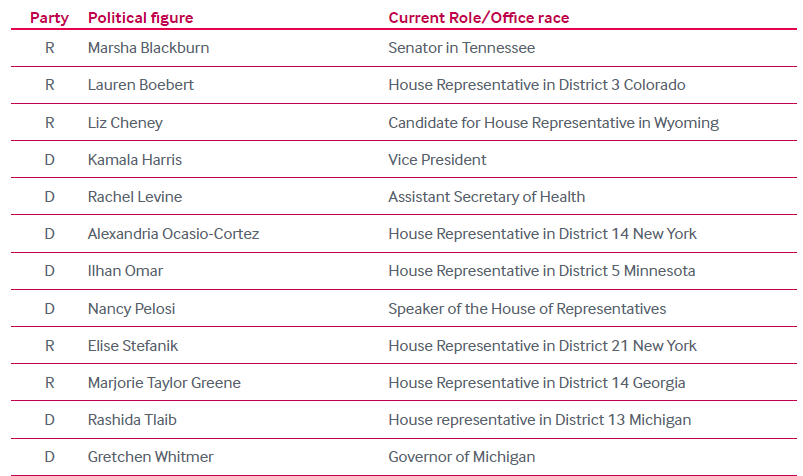

ISD curated a list of women in public office in the US. Women were selected based on party affiliation and similar levels of following, as well as identity-based criteria.[1]

Table 1: Summary of the list of candidates selected for this research (D = Democrat; R = Republican).

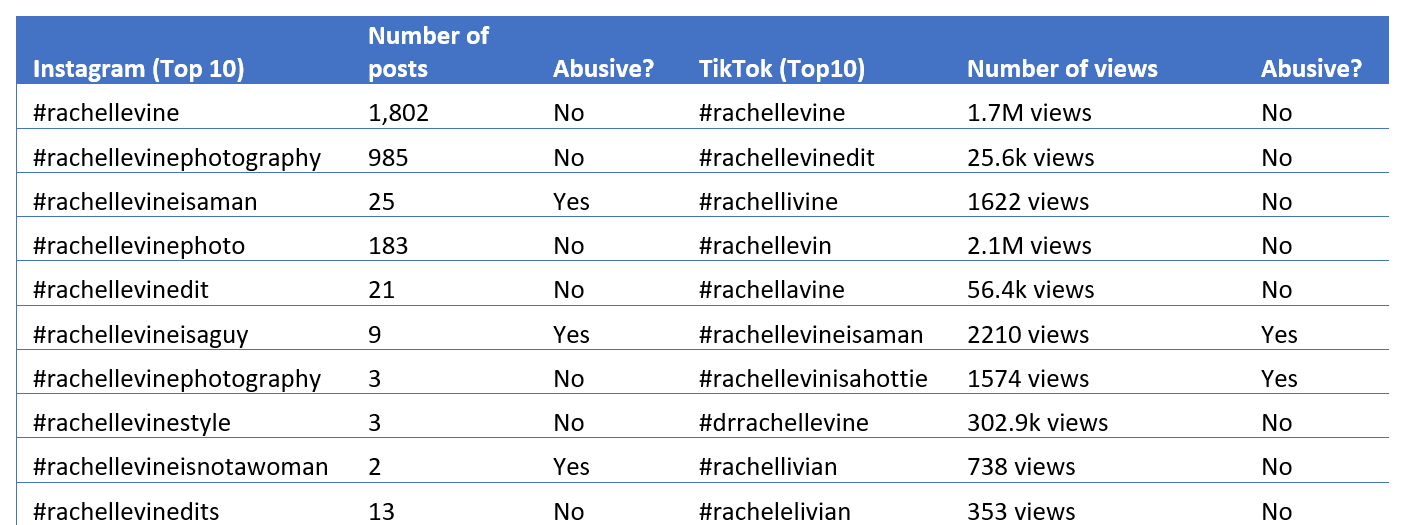

ISD collected the first 10 hashtags suggested by TikTok and Instagram when searching for each candidate. Hashtags were classified as abusive or non-abusive, and ISD analyzed the first 10 suggested posts for every hashtag classified as abusive (see Table 2). Our findings are outlined below.
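The sampling procedure described above can be sketched in a few lines of Python. This is only an illustrative sketch, not ISD's actual tooling: the `HashtagSample` class, the `build_sample` function and the placeholder hashtags are all hypothetical, and the abusive/non-abusive labels are assumed to come from manual analyst review rather than from any automated classifier.

```python
from dataclasses import dataclass, field

TOP_N = 10  # the study looked at the first 10 suggestions and the first 10 posts


@dataclass
class HashtagSample:
    """One suggested hashtag for one candidate on one platform (hypothetical structure)."""
    candidate: str
    platform: str
    hashtag: str
    rank: int        # 1-based position in the platform's suggestions
    abusive: bool    # label assigned manually by an analyst
    posts: list = field(default_factory=list)  # only filled for abusive hashtags


def build_sample(candidate, platform, suggested, abusive_labels, posts_by_hashtag):
    """Keep the first TOP_N suggested hashtags; for those labelled abusive,
    keep the first TOP_N suggested posts for later review."""
    samples = []
    for rank, tag in enumerate(suggested[:TOP_N], start=1):
        abusive = tag in abusive_labels
        posts = posts_by_hashtag.get(tag, [])[:TOP_N] if abusive else []
        samples.append(HashtagSample(candidate, platform, tag, rank, abusive, posts))
    return samples


# Placeholder data only: 12 suggestions, of which the first 10 are kept.
suggested = [f"#placeholder{i}" for i in range(1, 13)]
labels = {"#placeholder2", "#placeholder11"}  # analyst labels (hypothetical)
posts = {"#placeholder2": [f"post{i}" for i in range(15)]}

sample = build_sample("Candidate A", "TikTok", suggested, labels, posts)
abusive = [s for s in sample if s.abusive]
print(len(sample), len(abusive), len(abusive[0].posts))  # 10 1 10
```

In this sketch, a hashtag labelled abusive but ranked outside the first 10 suggestions is ignored, which mirrors the cut-off stated in the methodology.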

ISD identified several hashtags suggested by Instagram and TikTok promoting abuse against female political figures

Firstly, ISD found that abusive hashtags appeared among the first 10 suggested hashtags on both platforms for all 12 candidates, with the sole exception of Alexandria Ocasio-Cortez on TikTok. ISD also observed abusive hashtags with few views or mentions prioritized in recommendations above more popular, non-abusive hashtags. For example, transphobic hashtags misgendering Rachel Levine were featured three times in the first 10 recommended hashtags on Instagram and were ranked above non-abusive hashtags with more views (see Table 2 below).

Table 2: Summary of top hashtags on Instagram and TikTok for Rachel Levine.

In multiple instances, ISD identified gender-based abuse; women at the intersection of multiple marginalized identities were particularly targeted

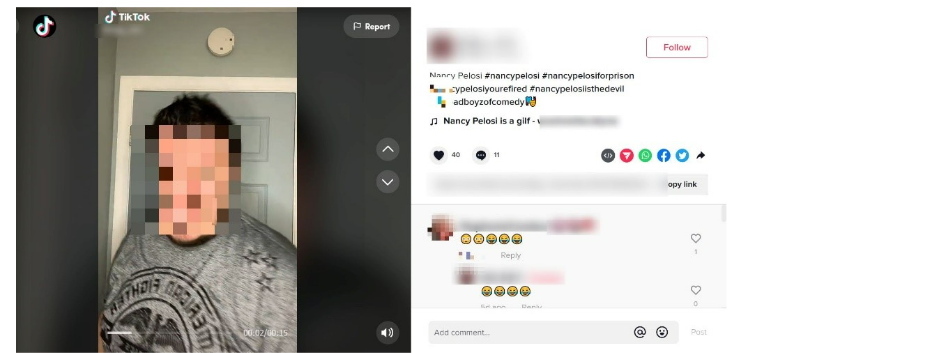

ISD found misogynistic language and attacks directed at women in the posts recommended under the hashtags suggested by both Instagram and TikTok, regardless of whether the women were Republicans or Democrats. Multiple posts targeted women with misogynistic slurs and dehumanizing language. Sexual content depicting both Republican and Democratic women was observed on both platforms, and was frequently featured in memes, videos and audio. On TikTok, analysts found that the same audio clip containing sexual lyrics targeting Nancy Pelosi was used in multiple recommended videos.

Figure 1: Example of a TikTok video featuring sexual content in the audio targeting Nancy Pelosi.

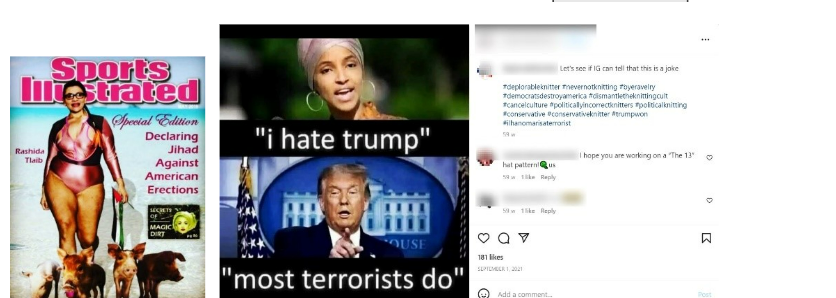

Candidates with intersecting identities were subjected to highly abusive content that targeted multiple aspects of their identity, including gender, gender identity, religion, race and national origin.

For example, ISD found that Representatives Ilhan Omar and Rashida Tlaib were subject to specific forms of abuse and were accused of supporting terrorist organizations or being foreign agents.

Figures 2 and 3: Examples of Instagram posts targeting Rashida Tlaib and Ilhan Omar.

ISD also found transphobic content targeting Democrat Rachel Levine. In fact, a large proportion of the posts targeting Levine under hashtags classified as abusive were transphobic. This included dehumanizing language and multiple posts misgendering Levine or using her deadname.

Figure 4: Example of a TikTok post misgendering Rachel Levine.

Throughout this investigation, ISD identified examples of gendered disinformation or the weaponization of sex-based narratives to attack women. For instance, multiple posts claimed Ilhan Omar had “married her brother”; dehumanizing and sexual content was often shared alongside this narrative.

Figure 5: Example of an Instagram post targeting Ilhan Omar with gendered disinformation and sexual content.

Content under promoted hashtags went against platforms’ own policies, which the platforms failed to enforce

ISD identified potential breaches of Instagram’s and TikTok’s policies throughout the investigation. Meta’s terms of service cover a broad range of online harms, including hate speech, bullying and harassment. TikTok’s community guidelines, meanwhile, prohibit hate speech and content involving hateful behaviour, hateful ideologies and sexual harassment. Nonetheless, ISD identified multiple instances of potential violations. This was particularly evident in audio and image-based content, which underscores the need for platforms to address gaps in implementing their own policies across all features.

Looking at specific examples, the audio targeting Nancy Pelosi would fall under TikTok’s sexual harassment policy, which prohibits “content that simulates sexual activity with another person, either verbally, in text (including emojis), or through the use of any in-app features.” The memes on Instagram claiming Ilhan Omar is a terrorist or suggesting she supported 9/11 are a potential violation of Meta’s hate speech policy, as the platform prohibits “dehumanizing speech or imagery in the form of comparisons,” including to criminals, based on a protected characteristic. Moreover, ISD identified posts promoting conspiracy theories alongside the hashtags, including COVID-19-related conspiracies. In these cases, the platforms themselves were promoting the viral hashtags and categorizing content under them, potentially violating their own policies. This was the case with a video tagged with another hashtag targeting Nancy Pelosi (#nancypelosiforprison), potentially violating Instagram’s COVID-19 and Vaccine Policy Updates & Protections policy. Similar observations were made for posts spreading disinformation about the outcome of the 2020 elections, the January 6 Capitol attack, and voter fraud claims in relation to Democratic candidates, all potentially breaching Meta’s mis/disinformation policies.

Figure 6: Example of an Instagram video which features disinformation and conspiracy theories on COVID-19 and vaccination. This post was not labelled by Instagram as sharing COVID-19 information.

Figure 7: Instagram post with a flyer for a rally in Michigan asking for a ‘Forensic audit of 2020 election’. This post was recommended on Instagram under the hashtag #gretchenwhitmerisatyrant.

Conclusion

This investigation illuminates platforms’ responsibility towards female political figures and their failure to enforce their own policies on content targeting women in the US political arena

Both Instagram and TikTok recommended abusive hashtags to users searching for women candidates or political figures in the 2022 midterm elections. These abusive hashtags promoted hateful content targeting candidates, likely a direct violation of platform policies.

Based on this investigation, ISD formulated several recommendations:

- Platforms need to ensure better implementation of their terms of service in relation to abuse and harassment policies.

- Social media platforms need to provide greater transparency about their content moderation policies, processes and enforcement outcomes relating to harassment and abuse, including the financial, human and technical resources allocated to content moderation.

- Platforms need to design and deploy content moderation systems that are reflective of how different platform features (image, video, audio etc.)

- Platforms need to do more to prevent the amplification of abusive content targeting women in office by reviewing recommended hashtags associated with prominent public figures. This investigation found that platforms routinely recommended abusive hashtags in association with the names of female political figures.

- Platforms need to regularly review and update their content moderation policies to reflect new patterns of abusive, harmful and illegal content, and ensure that human moderators receive appropriate and up-to-date training on detecting and assessing abusive content targeting high-profile women.

- Social media platforms need to put in place enhanced measures to address abusive and sexualized content aimed at candidates and women in office during electoral cycles.

[1] While we aimed to select candidates from a range of backgrounds, analysts prioritized party diversity and were not able to include Republican candidates from ethnic minority backgrounds.