It is (still) shockingly easy to find terrorist content on TikTok

By Ciaran O’Connor and Melanie Smith

16 March 2023

An ISD investigation conducted over the course of only a few hours found that content related to the 2019 Christchurch terrorist attack remains easily accessible on TikTok. On the fourth anniversary of the attack and only one week from TikTok CEO Shou Zi Chew testifying before the House Energy and Commerce Committee, ISD explores the platform’s failure to commit to enforcing its own policies.

Almost two years ago, ISD published a report titled ‘Hatescape: An In-Depth Analysis of Extremism and Hate Speech on TikTok’ (2021), which examined the extremist, terrorist and hateful content available on the platform at the time. One of the major findings from this 2021 report was that videos glorifying the Christchurch terrorist attack in 2019 were easily discoverable on TikTok.

Over the course of three months of research, ISD identified 30 videos expressing support for the actions of the perpetrator, Brenton Tarrant. These included 13 videos that contained content originally created by Tarrant and three videos that featured video game footage designed to recreate the attack.

On the fourth anniversary of the Christchurch terrorist attack, ISD decided to repeat this exercise.

This time, over the course of only one day of research, ISD identified 53 pieces of content that featured support for Brenton Tarrant. ISD categorised content as supportive if the TikTok video, added on-screen text or the accompanying caption used in the post by the user praised, promoted, glorified, discussed positively or uncritically mentioned Tarrant. Throughout the course of the monitoring period, though not the focus of this 2023 review, ISD also noted the presence of numerous TikTok videos and account profiles supporting the actions of other racially motivated mass shooters such as Dylann Roof and Anders Behring Breivik, as well as extremist ideologies like white supremacy and Holocaust denial.

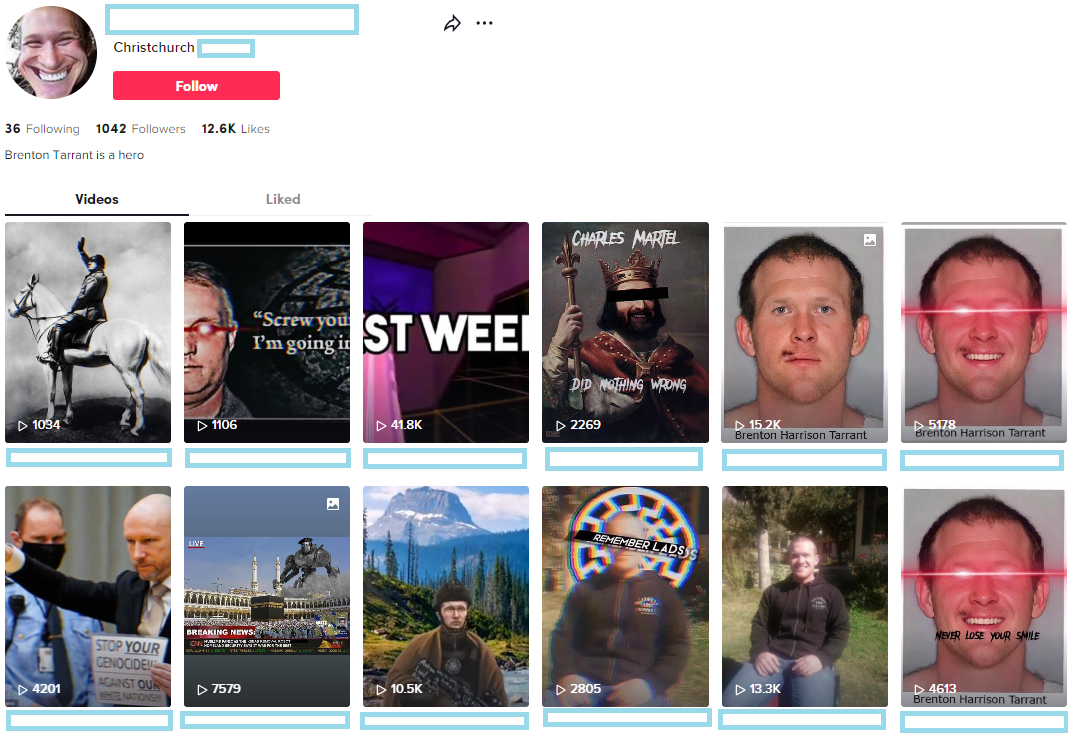

The account responsible for the most highly viewed video in this set (13.1k views) features audio from the livestream of the attack and uses a photo of Tarrant as its profile image. This user also referred to themselves as a Christchurch ‘troll’ in their username and included the phrase “Brenton Tarrant is a hero” in their bio. The account has over 1000 followers and is still live. In another instance, an account named itself after Dylan Klebold, one of the perpetrators of the 1999 Columbine school shooting, and used a photo of Klebold as its profile image. This account posted footage from the Christchurch terror attack on TikTok in December 2022. The post is still live.

Content that glorifies mass violence and the individuals who commit atrocities in furtherance of ideological goals is a pillar of the propaganda playbook deployed within racially motivated violent extremist communities online, and is often distributed with stated or apparent hopes of inspiring the next would-be killer in waiting. The glorification of killers offers a prospective purpose to those it seeks to radicalize: that murdering members of minority communities will earn them a hero treatment outlasting their time on Earth. Such content has been explicitly connected to acts of mass violence as recently as last year.

This data supports the finding that footage related to the 2019 terrorist attack is still easily discoverable on TikTok. Perhaps more concerning is that the clear promotion and glorification of terrorists and the spread of extremist ideologies on TikTok do not appear to have declined over the course of almost two years. While these activities are strictly prohibited by the platform’s community guidelines, ISD’s 2023 review suggests the platform is still failing to enforce these guidelines and putting users at risk in the process.

While the sample forming the core of this review is small, so was the monitoring period – these 53 pieces of content were discovered in a matter of hours using simple searches. Moreover, TikTok’s research API is still in its infancy and only available to researchers at some US-based universities, though the program is expanding. An effective API that is accessible to researchers across the globe has yet to be released, meaning that much TikTok analysis must still be conducted manually in order to comply with the platform’s Terms of Service.

This restrictive approach to transparency and accountability means that researchers, journalists and even governments across the world remain in the dark about aspects of how TikTok functions as a platform, including the influence of its algorithm, questions over user data and, as evidenced here, the presence of dangerous and harmful content. These are likely to be the major lines of questioning faced by TikTok CEO Shou Zi Chew as he prepares to testify on the Hill on March 23.

Findings

The 53 pieces of content can be broken down into 33 TikTok posts and 20 TikTok account profile features (username, profile image, biography, etc.).

Of those 53:

- The most viewed video, with 13.1k views, features audio from the livestream of the attack and was posted by an account that uses a photo of Tarrant as its profile image. The same account includes the phrase “Brenton Tarrant is a hero” in its bio and has just over 1000 followers. Across the 10 videos posted by this account, however, it has received over 105k total views. It is possible that TikTok’s algorithm has helped to amplify this content.

- 16 of the videos feature content originally created by Tarrant. These consist of either video footage taken from the livestream broadcast of the attack, photos taken by Tarrant in his car prior to beginning the attack, or segments of Tarrant’s manifesto. This is an increase from the 13 posts captured in ISD’s 2021 ‘Hatescape’ report.

- A further 15 videos feature content created by others that supports or glorifies Tarrant, consisting of other footage of Tarrant, use of the Saint Tarrant meme and content incorporating images of the Al Noor mosque in Christchurch. Two feature video game footage reenacting the attack.

- Of the 20 TikTok profile features uncovered, 12 use an image of Tarrant sitting in his car prior to beginning the attack as the account’s profile image. Eight use other photos of Tarrant (not from the day of the attack) as their profile image, or use Tarrant’s name in the account’s username or nickname. Combined, these accounts had a following of just over 6k users.

- Multiple TikTok accounts that produced videos featuring Tarrant or footage from the Christchurch attacks also glorified other mass shooters, such as Anders Behring Breivik and Dylan Klebold, in both videos and their usernames. Other accounts that were discovered, but were not the focus of this analysis, featured photos of Dylann Roof and other terrorists.

- Of the 33 TikTok posts, 28 were published on the platform between January and March 2023, while the rest were published last year. Some of these posts date back to August 2022, again raising the question of how they have not been detected and removed by TikTok.

Image 1: TikTok account featuring image of and reference to Brenton Tarrant in its profile, along with various content supportive of Tarrant and other terrorists throughout its posts.

Community Guidelines Enforcement, Violations and Evasion

The analysis of the TikTok content featured in this report was conducted on 15 March. All references to the status of TikTok content being live or removed were accurate as of this date. At the time of writing, all but one of the 33 TikTok posts are still live, including some that show moments of death. This is a clear and direct violation of TikTok’s guidelines on violent and graphic content.

Aside from content directly created by Tarrant (i.e. footage from the original livestream of the attack, photos, or his manifesto), all other content in TikTok posts supporting or glorifying Tarrant or account profile features using his photo or name is also in violation of TikTok’s guidelines on violent extremist organizations and individuals.

These guidelines prohibit content that “praises, promotes, glorifies, or supports violent acts or extremist organizations or individuals” or content with “names, symbols, logos, flags, slogans, uniforms, gestures, salutes, illustrations, portraits, songs, music, lyrics, or other objects meant to represent violent extremist organizations or individuals.”

Content referencing Tarrant takes many forms on TikTok. Searches for “Brenton Tarrant” return no results but generate a notice that informs users that “this phrase may be associated with hateful behavior.” However, ISD found this approach to be largely ineffective; searches for “Tarrant Brenton” or formulations using different keywords related to the attack alongside “video” returned numerous TikTok posts containing violative content.

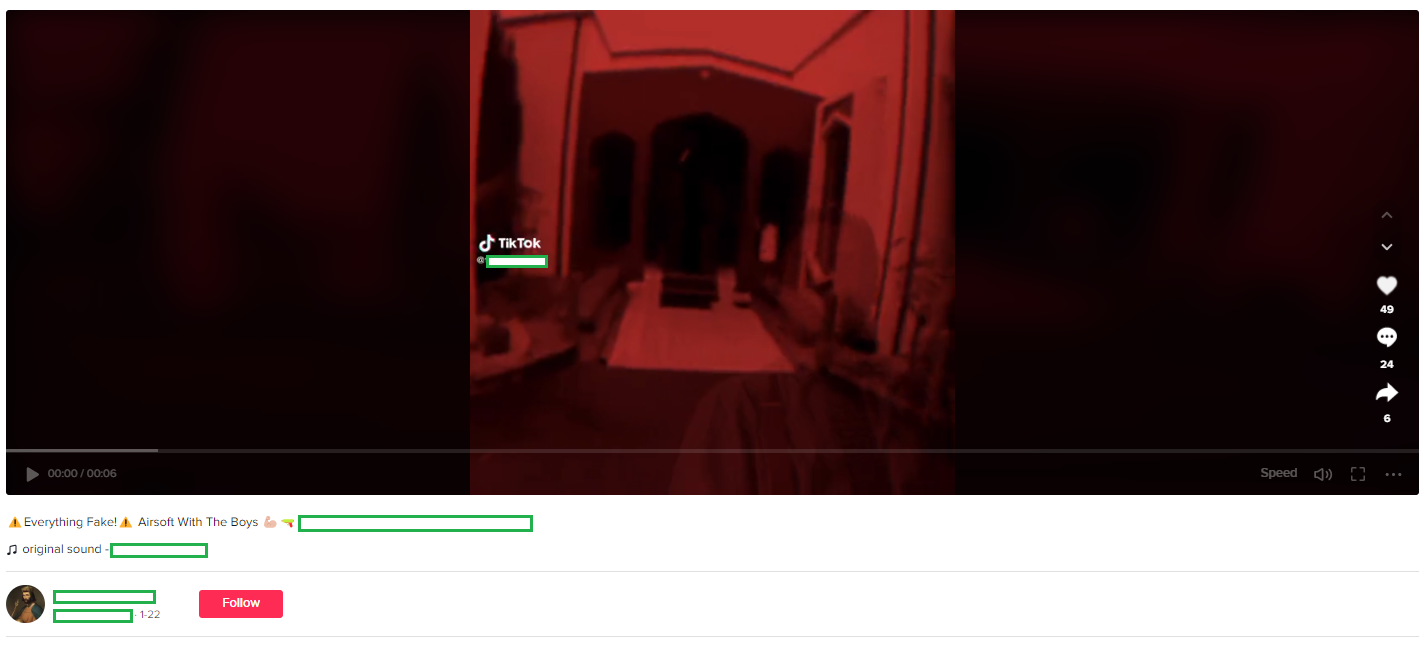

A number of posts and hashtags also used terms like “fake,” “for educational purposes” or “Airsoft” in an attempt to present their post as unrelated to terrorist content. Others used misspelled terms linked to the attack, a tactic that was likely intended to avoid platform bans and is common across violative TikTok content.

Image 2: This post features footage from the video of the attack and was uploaded to TikTok in late January. The post caption described the video as “fake” and containing “Airsoft” footage.

Lastly, some TikTok posts and users shared information stating that other content or even other accounts belonging to them had previously been removed or banned by TikTok for violating the platform’s community guidelines. For activity related specifically to promoting or featuring terrorist content, there is apparently little resistance faced by these users in returning to the platform. This represents a glaring and inexcusable failure by TikTok to protect other users.

Recommendations

In our 2021 report, ISD included a list of recommendations for TikTok, based on the research conducted at that time. Unfortunately, with respect to this 2023 review, a number of these recommendations remain the same.

With renewed urgency, we recommend that TikTok make the removal of terrorist content from the platform a top priority and, at the very least, a basic requirement for content moderation on the platform. As the research indicated back in 2021 and shows once again in 2023, terrorist content is still allowed to be posted and remain on the platform for some time. This must change.

ISD’s 2021 report detailed how extremist TikTok creators used their profile features to signal support for extremists and terrorists and recommended TikTok improve its understanding of how extremist creators use every aspect of their profiles to spread hatred on the platform. As evidenced in this review, this remains a challenge. Social media platforms must heed the warnings about gaps in both understanding and enforcement from researchers with considerable expertise on these issue sets.

The evasion tactic of using misspelled terms or hashtags related to terrorist and extremist attacks was highlighted in 2021 and even prior to that in other ISD research from 2020 about the spread of QAnon conspiracy theories. ISD has repeatedly called on TikTok to evolve its policies beyond narrow hashtag and keyword bans, yet once again it appears users are exploiting this enforcement gap with ease.

TikTok’s research API is still being rolled out to researchers in the US but has yet to be released globally. As in 2021, ISD still recommends TikTok prioritize data access for researchers, with all necessary privacy protections in mind. Access to the API is still controlled by the platform and research proposals require approval by TikTok’s US Data Security division, meaning some roadblocks remain in place.

Conclusion

Terrorist content is still easily discoverable on TikTok. As evidenced through this brief case study, based on a sample of just 53 pieces of content, posts and account data that support and glorify the 2019 Christchurch terrorist attack and its perpetrator are readily available. This content remains on the platform for weeks and months at a time, indicating that the platform is still failing to enforce its most basic policies regarding violent and graphic content.

TikTok has taken steps to enhance its transparency and accountability measures, as well as to address challenges facing its content moderation policies and practices, and these should all be welcomed. However, this 2023 review has similarly uncovered evidence that highlights a considerable enforcement gap in the platform’s application of its policies.

TikTok, and indeed all major mainstream social media platforms and technology organizations with skilled workforces and significant resources to hand, must always strive to make their online, digital spaces safe and free from harmful and hateful content. Until this is the case, we must use the resources at our collective disposal – including public testimonies from platform CEOs like Shou Zi Chew – to push for improved policies and practices.