Unprompted and Unwarranted: YouTube’s Algorithm is Putting Young Men at Risk in Australia

10 May 2022

By Elise Thomas

What kind of content is YouTube recommending to young Australian men? With the support of Reset Australia, my colleague Kata Balint and I recently embarked on a small study to answer this question.

The initial focus of the study was to examine algorithmic amplification of far-right and extreme content, but as our research progressed a different – and disturbing – pattern began to emerge.

This Dispatch is based on the findings from our report, Algorithms as a Weapon Against Women: How YouTube Lures Boys and Young Men into the ‘Manosphere’, and the journey getting there.

_________________________________________________________________________________

Our study centred on ten YouTube accounts, which we created to pose as young Australian men and teenage boys. Half of the accounts were set up as adults, the other half as minors. Eight of the accounts were each given a seed list of accounts to follow, drawn from across the ideological spectrum, from mainstream right to far-right extremism. This meant that some accounts were made to watch, like and subscribe to relatively mainstream right-wing content, such as Sky After Dark shows, while others were used to watch, like and subscribe to content from known Australian neo-Nazi groups. Two accounts were not populated with seed lists, and analysts only watched and engaged with videos recommended to them by YouTube.

We wanted to see how YouTube’s recommendation algorithms would affect the accounts – for example, whether the more ‘mainstream’ accounts would be pushed towards more extreme content.

In our study (which, as noted, was small and conducted over a short period of time), we did not find evidence of that occurring. Instead, the recommendations appeared to remain at about the same level as the initial inputs – that is to say, the more mainstream accounts were recommended more mainstream content, and the more extreme accounts were recommended similarly extreme content. A much larger study from US-based researchers, coincidentally published at a similar time to ours, arrived at the same conclusion.

We did, however, find something else. Regardless of where they were on the ideological spectrum, every single account was recommended content promoting anti-trans, misogynistic and ‘Manosphere’ views. The Manosphere is a term often used by researchers to refer to a loose collection of mostly online social movements marked by their overt and extreme misogyny, and warped perceptions of masculine identity.

Provocative content recommendations

For most of the accounts, recommendations for misogynist content flowed through a particular gateway: videos featuring Jordan Peterson or Ben Shapiro.

Peterson is a controversial Canadian author and media figure who often speaks on the topics of masculinity and gender relations. Neither his account nor his name was included on any of the account seed lists, but content featuring Peterson was consistently recommended to the accounts both on the main YouTube platform and on YouTube Shorts (a relatively new YouTube product which has been described as YouTube’s ‘TikTok competitor’). Early recommendations of third-party uploads featuring Peterson included videos with titles such as “When Entitled Women Get Humbled” and “Jordan Peterson: Why Do Nice Guys Nice [sic] Finish Last?”

Image 1: Recommended video in the study featuring Jordan Peterson.

These observations align with the findings of a 2021 study by the Queensland University of Technology, which described an “anti-feminist trend” among YouTube videos recommended in response to searches for the term ‘feminism’. The study also noted that “videos featuring the controversial public intellectual Jordan Peterson emerge as ‘winners’”.

In our study, engaging with content featuring Peterson and Shapiro appeared to serve as a gateway into recommendations for a much wider slew of anti-feminist, misogynistic, anti-trans and Manosphere content.

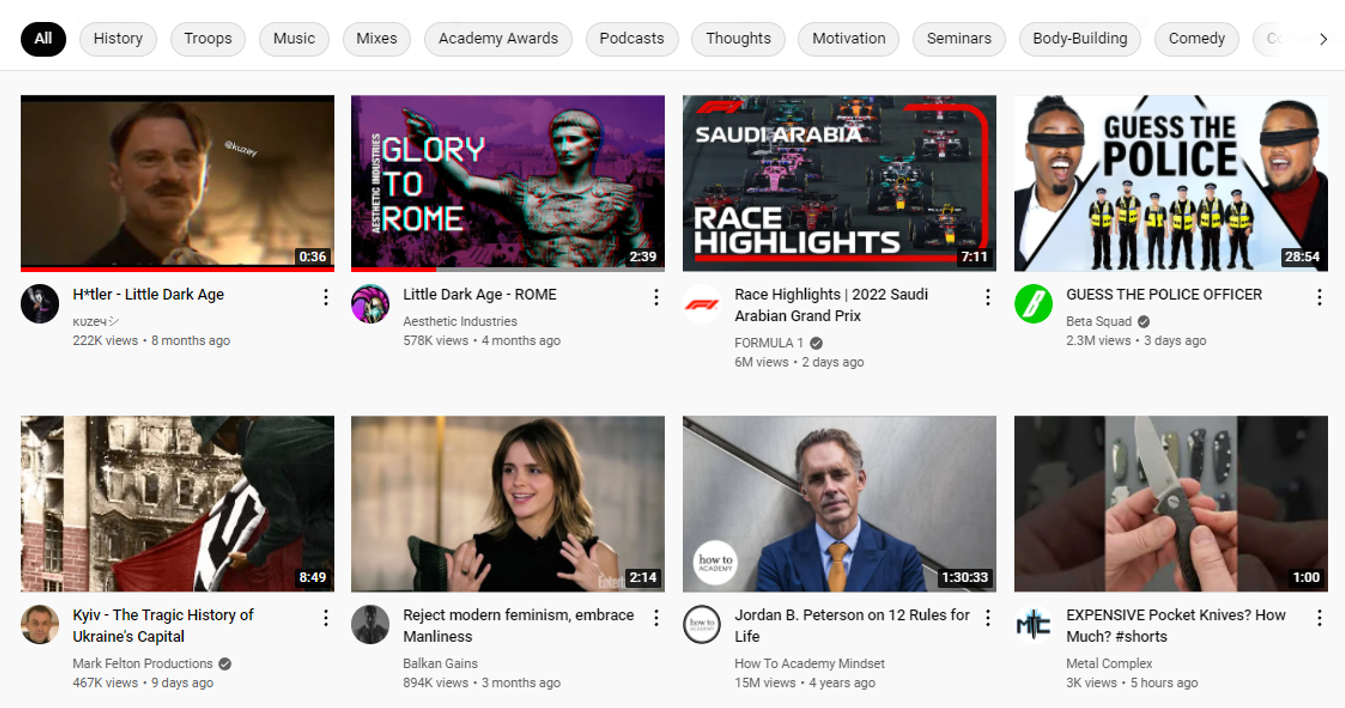

For one of the two accounts not populated with a seed list, the lack of initial input seems to have led the YouTube algorithm to be more responsive to the account’s behaviour. For this account, there was a relatively short pathway to recommendations involving coded Nazi glorification. This path wended its way from content featuring Peterson and Shapiro into ‘Sigma Male’ Manosphere content, and from there to fashwave aesthetic content, music edits of Nazi soldiers in WWII, Hitler fan videos and recommendations for documentaries about serial killers with female victims.

This trajectory towards extreme content only happened with one of the two accounts that were not populated with a seed list. However, it remains an interesting qualitative illustration of the significant overlap between the Manosphere and white supremacist online subcultures, and the role that algorithms can play in facilitating users’ journeys from one to the other.

Image 2: Screenshot of recommendations shown to the ‘blank’ account, including a Hitler music video, fashwave aesthetic content, Manosphere content, a video featuring Jordan Peterson, and a video about knives.

A recent survey of 16- and 17-year-olds conducted by Reset and YouGov, carried out in connection with our study, found that respondents who identified as white were significantly more likely to have seen YouTube videos promoting anti-feminist narratives than respondents from other backgrounds. Male respondents were also much more likely to have seen Jordan Peterson and Ben Shapiro content than female respondents, with 25% of young men having seen Peterson content compared with just 6% of young women.

It should be noted that the survey did not account for the ideological positions of respondents. It may be that male accounts which skew ideologically right, as the accounts in our research did, are even more likely to be shown Peterson content. This may explain why, while a quarter of the young men surveyed had seen Peterson content, it was shown to every single one of the accounts in our study.

Great risks to an already at-risk population in Australia

Like too many countries around the world, Australia is grappling with the interlinked problems of misogyny, discrimination and gender-based violence against both cis and trans women. Australia is also facing the challenges of supporting youth mental health, including amongst young men and boys for whom ideas about masculinity, and what it means to be a ‘real man’, may play a key role in their sense of identity.

In light of this, it is concerning that YouTube’s recommendations appear to be pushing young men (and potentially young men who are already interested in right-wing ideas) towards content that promotes warped, unhealthy concepts of masculinity and disrespectful, potentially harmful attitudes towards women.

It is particularly disturbing that this happened without any prompting on the researchers’ part. We did not set out looking for this. It was simply served up, in the same way it appears to be finding a substantial proportion of young men and boys in Australia.

To be clear, this is not a call for more content moderation. We are not saying that this content should be banned from YouTube (except perhaps for the Hitler fan videos). There is, however, a clear distinction between allowing the content to exist on the platform and actively pushing it – unprompted – onto young users.

Recommendations for policymakers

Our report contains four recommendations for policymakers centred around: focusing on community and societal risks, as well as individual risks; stronger regulation of social media platforms’ systems and processes, rather than just content moderation; platform accountability and transparency; and strong regulators producing properly and consistently enforced regulation.

Addressing gender inequality and gender-based violence against cis and trans women requires addressing the root cause of the problem: disrespect. As former Australian Prime Minister Malcolm Turnbull said when he unveiled Australia’s multimillion-dollar strategy to combat domestic violence in 2015, “disrespecting women does not always result in violence against women, but all violence against women begins with disrespecting women”.

These are deep-rooted social problems which defy quick fixes. However, something we can do quickly is prevent algorithms from making them worse. We need to broaden our thinking beyond whack-a-mole content moderation and consider the systemic impacts of which content – and, therefore, which ideas and beliefs – algorithms amplify, and in turn how that shapes the communities we live in.

Elise Thomas is an OSINT Analyst at ISD. She has previously worked for the Australian Strategic Policy Institute, and has written for Foreign Policy, The Daily Beast, Wired and others.