Election disinformation thrives following social media platforms’ shift to short-form video content

4 November 2022

By Clara Martiny, Isabel Jones and Lucy Cooper

TikTok’s meteoric rise over the past three years has forced other social media platforms to prioritize short-form video content as they attempt to compete with the platform’s success. In 2020, both Meta and YouTube launched their own hubs for short-form video content: Instagram Reels and YouTube Shorts.

Since then, both platforms have made a massive effort to attract content creators and audiences alike. In July 2021, Instagram announced it would invest $1 billion in Reels creators; in September 2021, the platform announced it was testing a “Reels Play bonus” that would pay creators based on engagement. Similarly, in September 2022, YouTube announced it would monetize YouTube Shorts, running ads in a user’s feed and giving creators 45% of the revenue “from the overall amount allocated” to them.

The problem with the rapid rollout of these new features is that they are not being properly risk assessed or designed with safety considerations as a priority, and they are being introduced on top of existing content moderation systems designed for different content formats (or product features). These older processes have been shown time and time again to be inconsistent and ineffective, even with text and images alone. The recent focus from many social media platforms on rapidly developing short-form video features is a visceral example of prioritizing market demands over product safety.

As short-form video continues to keep users engaged on social media, it is critical for tech platforms profiting from the consumption of this content to ensure effective and consistent moderation. This means applying tried-and-tested approaches consistently and developing stronger policies where existing policies fail to deal with newer features, as well as being more transparent about the kinds of resources (or lack thereof) that go into video moderation.

There are many types of harmful content that platforms should expect to grapple with on their short-form video products. Already, YouTube Shorts has been found to recommend transphobic and other hateful content to numerous users who were not explicitly seeking it out. ISD has also previously identified how YouTube Shorts appears to be more aggressive, pushing its users to extreme content faster than the rest of the platform.

Currently, one of the most pressing types of harmful content for the US market is election disinformation. This Dispatch analyzes the election disinformation policies of mainstream social media companies (YouTube, Meta, and TikTok) in the weeks leading up to November 8, and highlights how platforms failed to prepare for short-form videos as a vector for election disinformation.

Election disinformation policies are in place for the midterms – but are they working?

YouTube, Meta, and TikTok have all geared up for the 2022 US midterm elections by updating their approaches to combatting election-related disinformation and highlighting their election-specific content moderation rules (i.e., Community Guidelines or Standards).

On September 1, YouTube announced its algorithm would “prominently [recommend] content coming from authoritative national and local news sources” when users search for midterms content. The platform also said it would show a “variety of informational panels in English and Spanish” to give more context to searches related to the midterms – whether voting-related, candidate-related, or general election news. In preparation for Election Day, the platform also said it would provide updated results as they start to come in. The announcement also refers to the platform’s elections misinformation policies, which go into more detail on what election-related content is allowed, including content about past US presidential elections. These policies also apply to YouTube Shorts.

Meta made a similar announcement on August 16 with an update on how the company would handle the midterms across its platforms. Meta announced its approach to the midterms would be “consistent with the policies and safeguards” it had during the 2020 election. The company added English and Spanish informational panels “deployed” in a “targeted and strategic way.”

The announcement also refers to Meta’s misinformation policy, which includes a section on “voter or census interference.” Instagram’s policy does not remove false or misleading content including claims that have been found false by fact-checkers – the platform instead demotes them from the “Explore” feed and “Reels” tab.

TikTok announced its “commitment to election integrity” ahead of the midterms on August 17, claiming it would “provide access to authoritative information” and roll out an “Elections Center” with election content and resources in “more than 45 languages.” The platform also plans to update users with election results on Election Day. TikTok’s announcement said it would label videos and hashtags mentioning the election and linked to its misinformation policies, which tell users not to post content that “misleads community members about elections.”

But these policies may not be enough. Experts say Meta’s strategy for combatting election disinformation ahead of the midterms is not doing enough to counter the spread of narratives that, for example, push the “Big Lie.” Other research has shown that TikTok is not taking a comprehensive enough approach to political advertising ahead of the midterms.

The research in this Dispatch shows that content containing election disinformation is easy to find on YouTube Shorts, Instagram Reels, and TikTok, suggesting social media companies have underinvested in moderation of short-form video content.

Below, ISD analysts highlight examples of hashtags and videos identified on YouTube Shorts, Instagram Reels, and TikTok that spread misleading claims about elections (whether the 2020 presidential election or the upcoming midterms) and push common election fraud narratives (more of which are highlighted in ISD’s previous Dispatch). The examples also show how platforms are seemingly failing to moderate short-form video content.

YouTube Shorts

ISD analysts identified numerous YouTube Shorts that contained false or misleading claims about elections using a combination of keyword searches and qualitative analysis. The Shorts identified include claims that the 2020 election was stolen and other election conspiracy theories. These videos remain accessible as of November 1, 2022, and do not contain information labels, despite violating YouTube’s policy on content that undermines election integrity.

A Short published by MSNBC features Donald Trump saying the “corrupt media” never shows the true size of his rallies and obfuscated the size of the crowd at the January 6 attack on the US Capitol. Trump claims in the video that January 6 was “the biggest crowd he’s ever seen,” and that individuals were there to “protest a corrupt and rigged election.” No label or information debunking these claims is provided by YouTube or by the MSNBC channel, which shared the video without any context.

A general search for Shorts including the term #2000mules on YouTube also does not provide users with an information label. As detailed below, the same is true for Instagram Reels; TikTok, however, appears to have prohibited search results under the hashtag. 2000 Mules is a conspiracy film produced by Dinesh D’Souza and election-denying group True the Vote that has been a primary driver of election conspiracy theories since its release in May 2022. 2000 Mules claims to prove that ballot “mules” engaged in ballot trafficking that swung the 2020 presidential election in favor of Joe Biden. While YouTube’s search results did promote credible information regarding 2000 Mules, including news sources debunking its claims, ISD analysts identified eight Shorts that promote or feature claims from the film, two of which include links to the full feature-length film. 2000 Mules makes numerous false claims about the 2020 elections that violate YouTube policy. These Shorts were identified with relative ease, demonstrating that they remain easily accessible.

Examples of 2000 Mules Shorts content include a Short featuring a trailer for the film, which claims the full film is available on the user’s channel. The Short remains readily accessible without any information label or additional context. A BitChute link to the full film was posted in a comment on the Short; other users commented that they used the BitChute link to access the film. BitChute is a video-based platform flagged by the ADL for hosting hate-based content. At least one other Short included the BitChute link to the full film, this time as text displayed within the video. Others made explicit claims that the 2020 election was “stolen,” including urging viewers to “open your mind to see the #widespread #2020 #Presidential election fraud.” An additional Short promoting the film shows footage of individual “ballot mules” allegedly “stuffing” ballot drop boxes with multiple ballots. Claims that “mules” stuffed ballot boxes with fraudulent votes were central to 2000 Mules. While the faces of individuals featured are blurred, similar clips from 2000 Mules have been shared across social media and have reportedly led to calls for “mules” to be identified, arrested, and even killed.

No additional context or information labels were applied to any of the above Shorts, despite their explicit promotion of false and misleading claims of election fraud. Their real-world impact should not be underestimated. “Stop the Steal” rhetoric was integral to the January 6 attack on the US Capitol and the myriad baseless election conspiracies that have festered since. 2000 Mules played a considerable role in moving election conspiracy theories from fringe to mainstream spaces, spurring calls against measures designed to expand voting accessibility including mail-in ballots and ballot drop boxes. Incidents of potential voter intimidation have since been observed, recently including armed individuals in tactical gear “watching” a drop box in Arizona.

Figures 1 and 2: Screenshots of YouTube Shorts that mention or promote conspiracy film 2000 Mules.

Figure 3: A YouTube Short posted by MSNBC in which Donald Trump claims the 2020 US election was “rigged and stolen”.

Instagram Reels

Meta’s misinformation policy claims that videos with false or misleading content about elections are removed from the “Explore” and “Reels” feeds, but ISD found that Reels containing false claims are readily available through searches and hashtags. Search terms about election conspiracies – such as #2000mules and #deadvoters – are not restricted and do not have any fact-checking labels. In fact, throughout the process of this investigation, analysts did not observe any fact-checking of election disinformation on Instagram Reels.

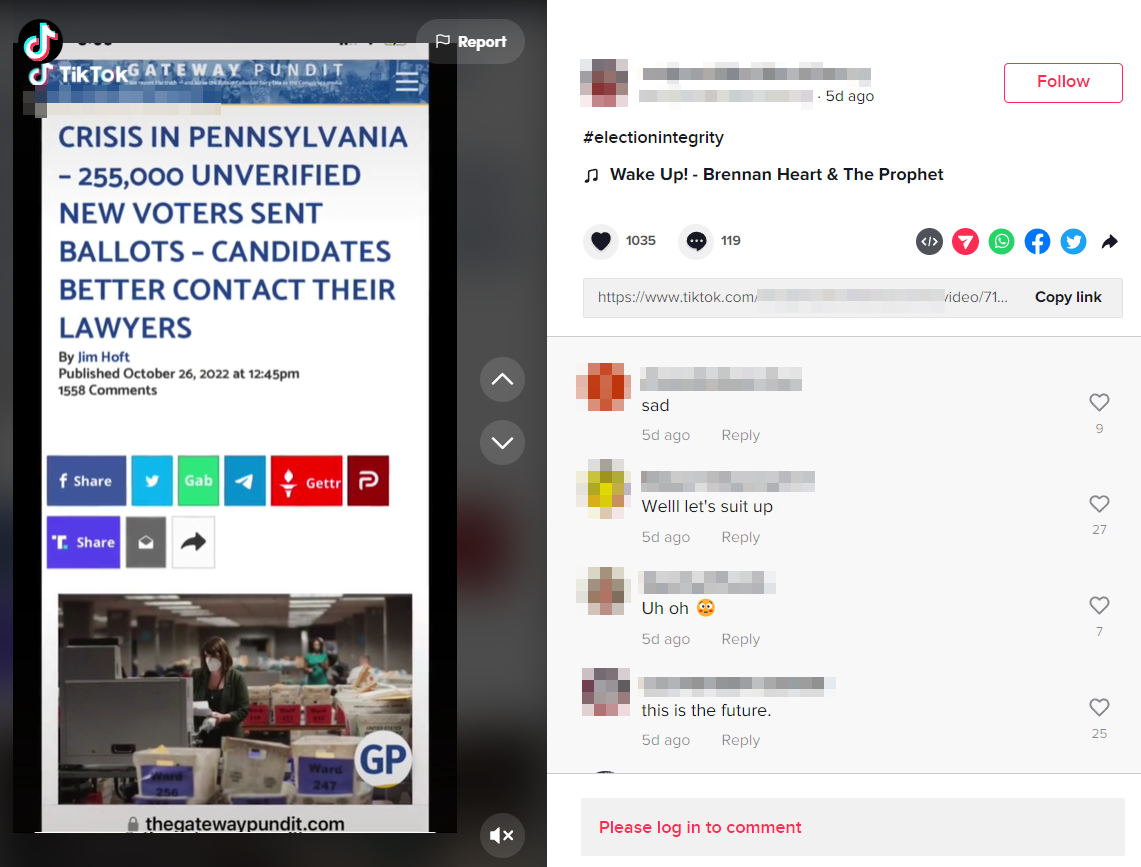

Under the hashtag #electionintegrity, which has 19.6K posts (including Reels and photos), ISD observed multiple Reels with false statements about elections. These videos included claims that mail-in ballots are used to manipulate election results and that Democrats are using dead voters to swing elections in their favor. One of the first 25 Reels associated with the hashtag is from a former Michigan gubernatorial candidate, featuring a zombie with multiple “I voted” stickers and the caption “why is it that dead people always vote Democrat?” None of the first 100 videos returned by the #electionintegrity hashtag were given information or fact-check labels for election disinformation. Some of the posts did have COVID-19 information labels.

Figures 4 and 5: (top) a Reel amplifying the “dead voters” conspiracy; (bottom) a Reel promoting conspiracy theories about mail-in ballots.

The hashtag #2000mules has 14.6K posts, #stolenelection has 22.3K posts, and #riggedelection has 24.1K posts. Most videos under these hashtags contain disinformation about the 2020 presidential election and 2022 midterm elections. ISD reviewed the top posts under these hashtags and did not note any election information labels.

In addition to these videos appearing in hashtag searches, there are also Instagram Reel creators with large audiences who are taking to the feature to spread election conspiracy content. One frequent poster of election-denying claims on Instagram is the media watchdog group, Media Research Center (139K followers). Their top pinned post, which has been viewed 39.3K times, is a Reel compilation of instances they claim prove election fraud. The post contains a link to the account’s fundraiser to “expose voter fraud,” which is also linked in the account’s bio.

Many of the top videos that contain election-denying hashtags are clips from right-wing and fringe media, including Fox News, One America News, and InfoWars. These clips frequently feature election-denying actors such as True the Vote and former Newsmax host Grant Stinchfield, who typically amplify conspiracy theories about elections.

TikTok

By conducting manual searches on TikTok, analysts identified hashtags and unlabeled videos spreading popular election disinformation narratives, including but not limited to: videos about the 2000 Mules documentary, videos pushing various conspiracies about the midterms, and videos claiming the election was stolen. While a small proportion of these videos did have information labels leading users to more information about the midterms, most remained unlabeled.

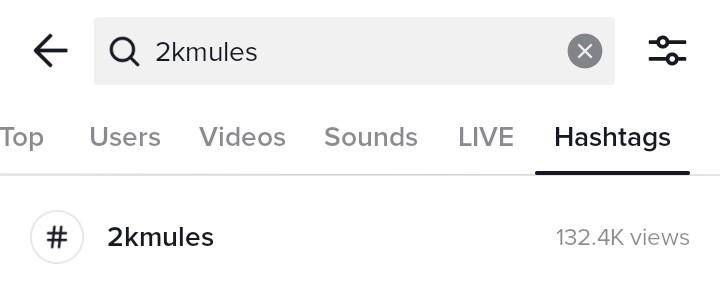

Unlike Instagram, TikTok has prohibited search results for the hashtag #2000mules on its platform, including slight variations (such as #200mules or even #ballotmules). As such, the search returns a message stating “this phrase may be associated with behavior or content that violates our guidelines”. However, users spreading false claims made by the documentary have found a way around this moderation by using the hashtag #2kmules instead. The videos under #2kmules have garnered a total of 132.4k views. Some of these videos have a label that reads, “Get info on the U.S. midterm elections,” but most do not.

Figure 6: the hashtag #2kmules on TikTok, which has garnered 132.4k views.

Additionally, the audio “2000 Mules” by Forgiato Blow, a rap song with audio clips from the documentary and lyrics that mention votes getting stolen, has been used in 47 videos – all of which highlight claims made by the documentary or urge users to watch it. Some accounts have also uploaded portions of the documentary.

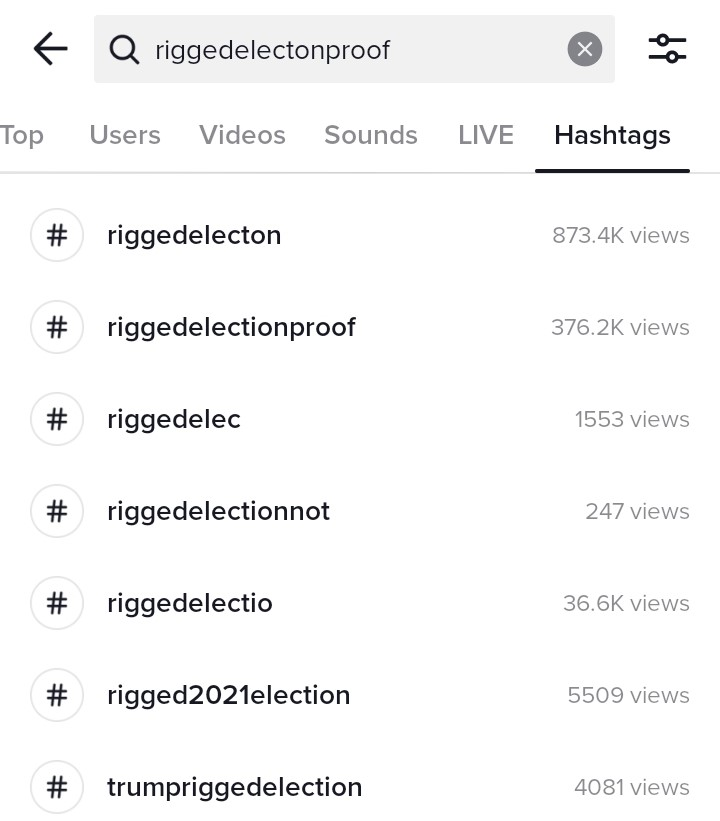

TikTok also allows the hashtags #electionstolen (314.6k views) and #riggedelectionproof (368.8k views). While scrolling through the videos, analysts identified some posted in recent months (including after TikTok’s August 17 announcement) and others that were uploaded back in 2020. Most videos amplify claims that the 2020 presidential election was stolen and rigged. Another hashtag, #stoptheststeal (a slight alteration presumably designed to evade TikTok’s ban of #stopthesteal), has 461.0k views.

Figure 7: various misspellings of “rigged election,” including the correct spelling of “rigged election proof” found on TikTok.

The hashtag #electionintegrity, which does not (on its own) push any election disinformation narratives, has 13.2 million views. However, the top videos using this hashtag featured public figures and candidates who have been known to spread claims about election fraud, such as Kari Lake and Kristina Karamo. In a review of the first 50 videos returned for this hashtag search, analysts found that only 10 had received the “get info on the U.S. midterm elections” label, despite most of them referring explicitly to the midterms.

Figure 8: A screenshot of a TikTok under the hashtag #electionintegrity spreading claims about midterms in Pennsylvania, unlabeled by the platform.

Conclusion

The examples included in this Dispatch represent a fraction of the easily accessible short-form video content that violates election disinformation policies across YouTube, Instagram, and TikTok. ISD analysts identified problematic content with relative ease, indicating two things: firstly, that newer platform features and functionalities, such as short-form videos, are being rolled out without comprehensive risk assessment and user safety considerations; and secondly, that existing platform moderation policies continue to fail despite platform promises.

ISD conducted this research using entirely qualitative and small-scale methods, as opposed to the often complex big data collection or machine-learning approaches researchers use to investigate harmful content online. In this way, analysts were able to replicate the journeys of users on each of these platforms who may be interested in election-related issues and searching for related content in the weeks before the midterms. As demonstrated, disinformation and election conspiracy claims are readily available to these users.

Inconsistent and insufficient moderation policies are currently assisting bad actors seeking to undermine voter faith in US elections and worsening political tension surrounding Election Day. As the 2022 midterm elections draw near and the 2024 presidential election looms large, platforms that are committed to upholding their promises to combat election disinformation cannot allow these shortcomings to continue. Short-form video content is lucrative for platforms and creators alike and should not be overlooked as a potential source of claims that undermine election integrity, spread false information about voting and elections, or even suppress votes. These platforms have the resources to develop better policies and moderation systems. Already, Meta predicts a $1 billion revenue run rate from Instagram Reels. YouTube meanwhile announced that Shorts have “amassed more than 30 billion daily views,” leading the platform to create a $100 million YouTube Shorts fund and indicating it believes short-form video is well worth investing in.

It is possible for platforms to capitalize on the popularity of short-form video content in ways that promote factual information on elections. This includes adding information labels and context to videos that feature hashtags known to be associated with election disinformation, and better tuning recommendation systems to promote credible content and demote content that includes election falsehoods. Most importantly, platforms must consistently apply their existing election disinformation policies across short-form videos; otherwise, the same problems platforms have faced – and have had substantial amounts of time to deal with – will continue and worsen in the future.