Anti-Vaccine Content is Still Clocking Up Millions of Views on YouTube Despite Major Policy Shift

9th November 2021

By Aoife Gallagher

In May 2020, YouTube began to crack down on COVID-19 misinformation, including anti-vaccine content. Over a year later, in September 2021, the platform announced policies covering all anti-vaccine misinformation, not just COVID-19-related content.

Despite this announcement, videos and accounts promoting widely debunked anti-vaccine tropes remain on the platform, clocking up millions of views. Some of the videos have been on the platform for more than 15 years, predating misinformation about COVID-19 vaccines, and are hosted on channels known to be influential in the wider anti-vaccine movement. ISD researchers investigated.

_________________________________________________________________________

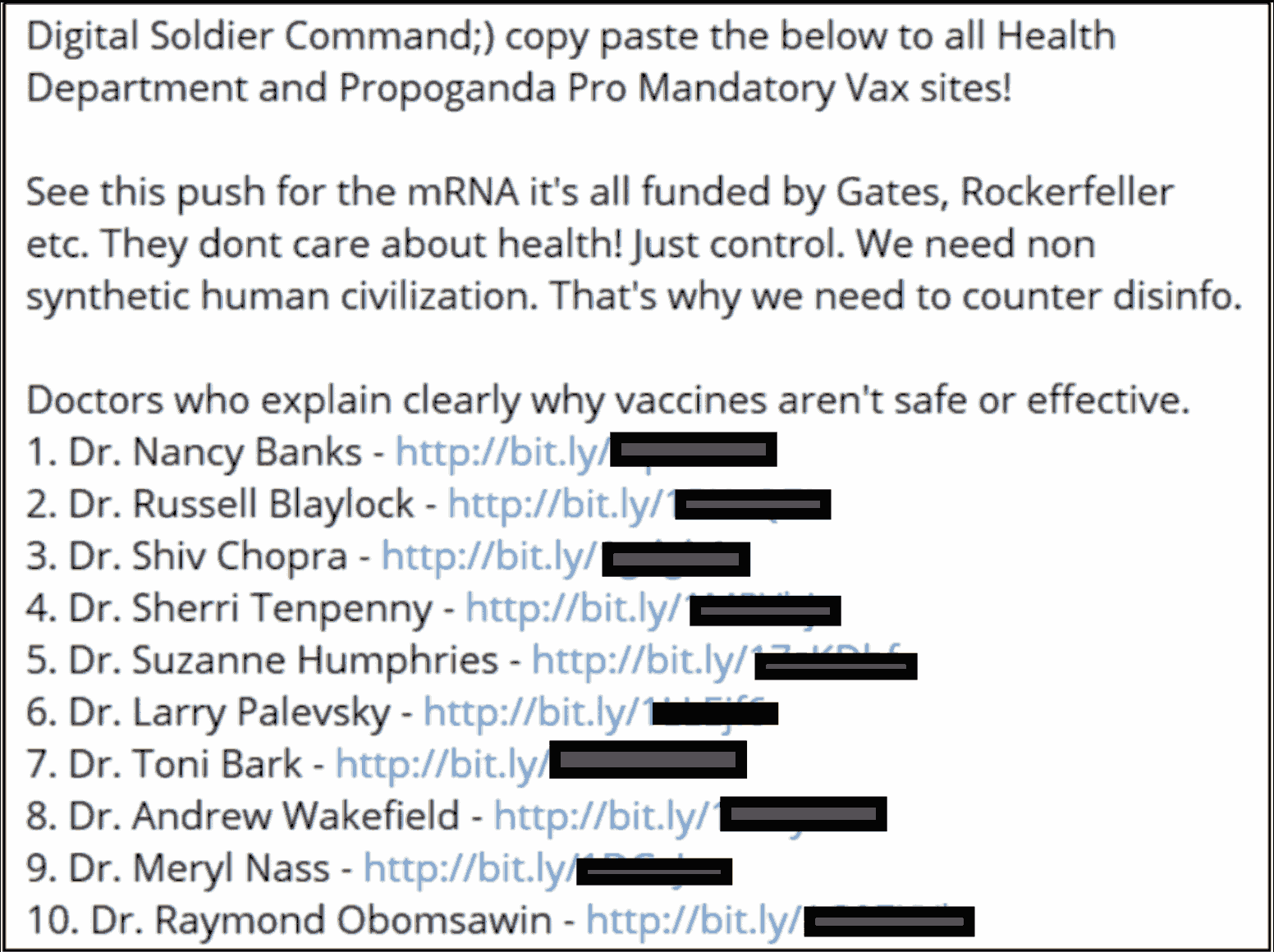

A repository of links to anti-vaccine content has been widely shared on Telegram throughout 2021, initially appearing in QAnon-related channels in September 2020 with instructions to “copy paste the below to all Health Department and Pro Propaganda Vax sites”. The message also claimed that the “push for the mRNA [is] all funded by Gates, Rockerfeller [sic] etc.” The list was then shared within wider COVID-19 and anti-vaccine conspiratorial communities on Telegram from January 2021 and continued to be re-shared throughout the year as the vaccine rollout progressed.

The list contained 62 individual bit.ly links corresponding to videos, articles and documentaries in which doctors discuss the supposed dangers of vaccines. Of the 62 links, 45 led to YouTube videos, and 26 of those videos were still active at the time of writing. These 26 active YouTube videos have clocked up a combined total of over 4.6 million views, with the majority posted on the platform between 2006 and 2014.
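The tallying step described above can be sketched in a few lines of Python. This is only an illustrative sketch, not ISD's actual tooling: the hostname list, the helper names `is_youtube` and `share`, and the sample URLs are all assumptions made for the example. It assumes the shortened links have already been resolved to their destination URLs.

```python
from urllib.parse import urlparse

# Hostnames treated as YouTube for this sketch (an assumption, not ISD's list).
YOUTUBE_HOSTS = {"youtube.com", "www.youtube.com", "m.youtube.com", "youtu.be"}


def is_youtube(url: str) -> bool:
    """Return True if the URL's hostname is one of the assumed YouTube hosts."""
    host = (urlparse(url).hostname or "").lower()
    return host in YOUTUBE_HOSTS


def share(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`, rounded to one decimal."""
    return round(100 * part / whole, 1)


# Placeholder URLs for illustration only; they are not from the repository.
resolved = [
    "https://www.youtube.com/watch?v=PLACEHOLDER1",
    "https://youtu.be/PLACEHOLDER2",
    "https://example.com/article",
]
youtube_links = [u for u in resolved if is_youtube(u)]
print(len(youtube_links), "of", len(resolved), "links point to YouTube")

# Proportions implied by the counts reported in this article.
print(share(45, 62))  # YouTube links as a share of all 62 links
print(share(26, 45))  # share of the 45 YouTube links still active
```

Applied to the counts reported here, roughly 73% of the links pointed to YouTube, and roughly 58% of those YouTube links were still live.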

Figure 1: A screenshot of the repository analysed by ISD shared in a QAnon Telegram channel.

None of the videos analysed discussed COVID-19 vaccines, instead focusing on false claims about the measles, mumps and rubella (MMR), human papillomavirus (HPV) and flu vaccines. Of these 26 videos, 17 clearly promote the widely debunked claim that vaccines are linked to autism, while others insist that Gardasil (the HPV vaccine) and the flu vaccine cause death. All three of these claims are listed as examples of prohibited content in YouTube’s vaccine misinformation policy.

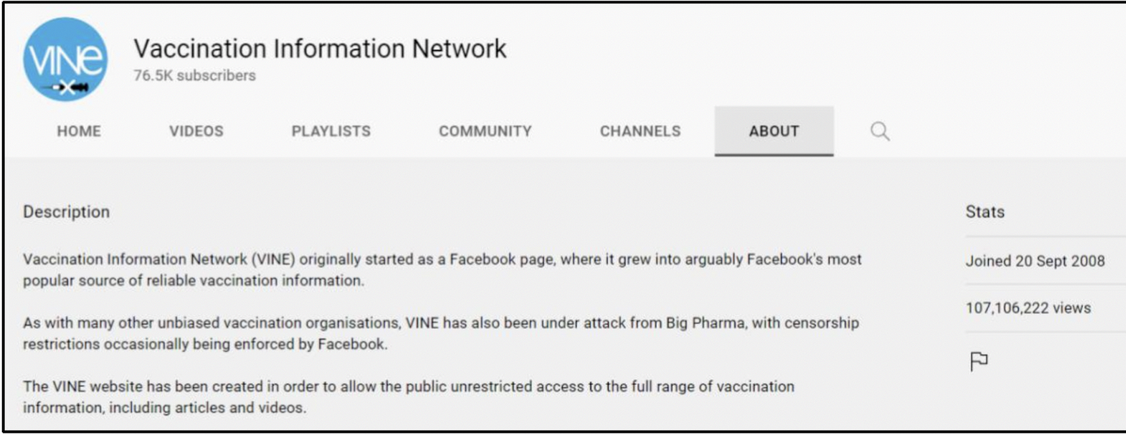

Some of the accounts hosting these videos belong to well-known anti-vaccine influencers, with thousands of subscribers and millions of views. Examples include the Vaccination Information Network (VINE), a group that has spread false claims about the MMR vaccine, 5G and the Zika virus. Its YouTube account, set up in September 2008, hosts just 12 videos that have been viewed over 107 million times.

Figure 2: Channel of the Vaccination Information Network with over 107 million views.

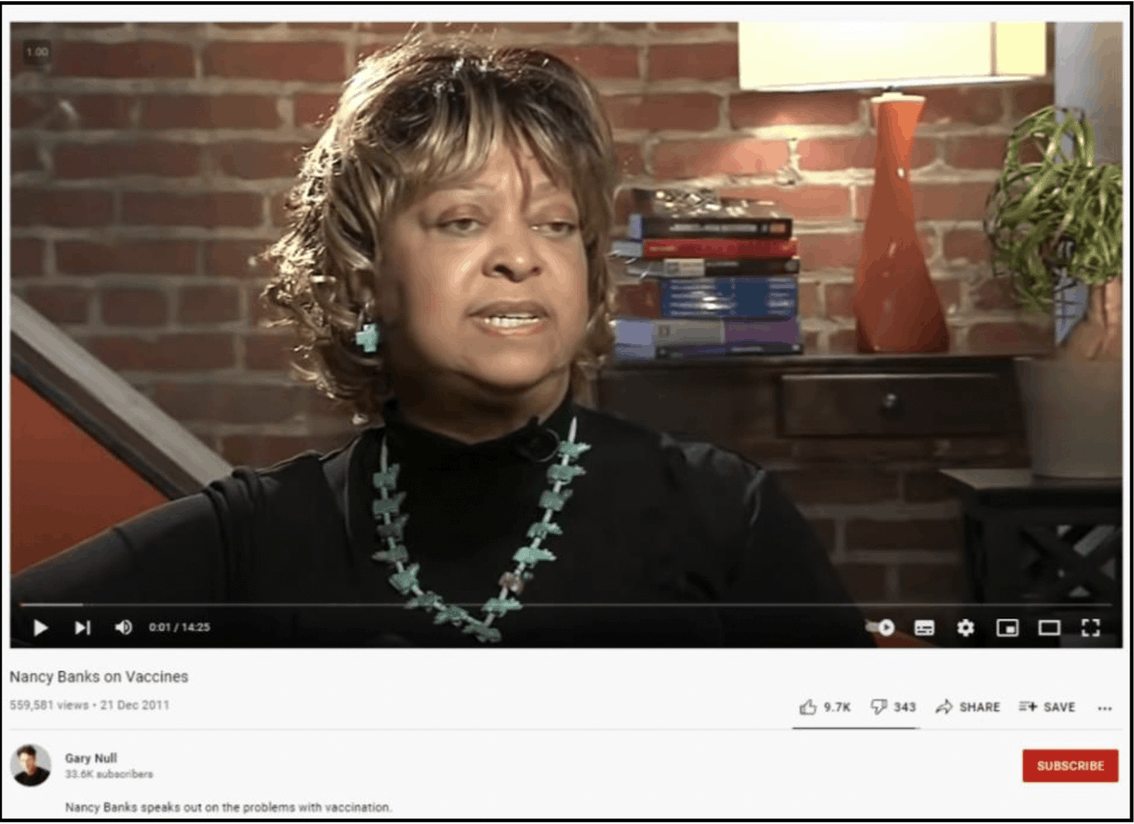

Another example is the account of Gary Null, a talk radio host who has spread various pseudoscientific theories for years, including denying the dangers of HIV/AIDS and rejecting scientific consensus around vaccines. Two out of the 26 videos found by ISD are hosted on Null’s account and have clocked up over 610,000 views. His account, which was set up in April 2008, has 33.6k subscribers and over 4 million views.

Figure 3: Video hosted by Gary Null’s account uploaded in 2011 with over 550,000 views.

The titles and descriptions of the videos also raise questions about the efficacy of the technology YouTube uses to detect anti-vaccine content on its platform, as some of them plainly state the egregious claims made in the videos. For example, one video is titled ‘Vaccines-The True Weapons Of Mass Destruction’ while another is called ‘Autism made in the U.S.A Vaccines, Heavy Metals, and Toxins are causing the epidemic of autism’.

There is also evidence of ad revenue being generated from some of the content, with five out of the 26 videos found by ISD running ads. These had accumulated over 1.2 million views at the time of writing.

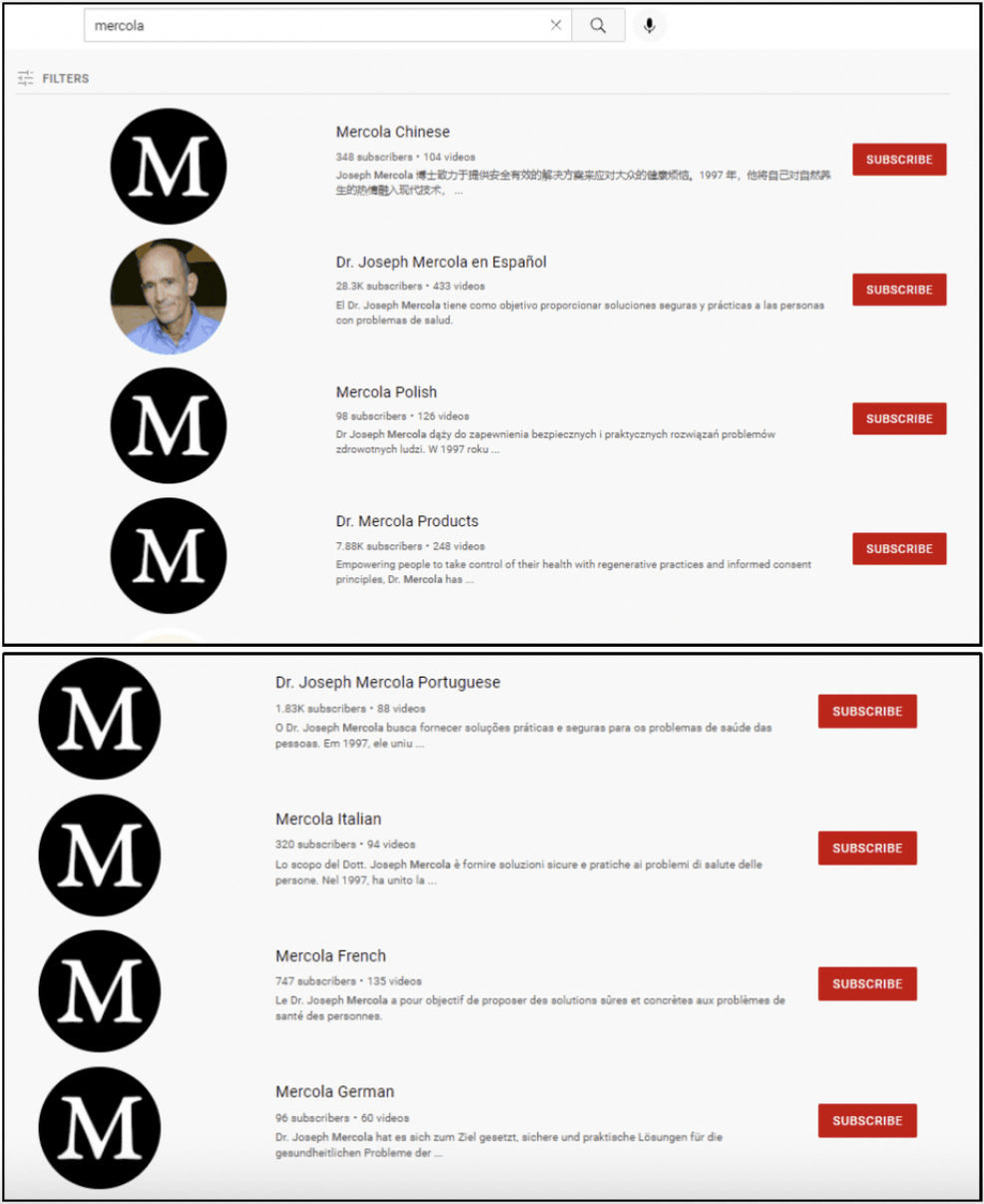

When YouTube announced the changes to its policies, it said that well-known anti-vaccine ‘superspreaders’ such as Dr Joseph Mercola and Robert F. Kennedy Jr would be removed from the platform. However, a simple search for channels named ‘Mercola’ surfaced 11 channels hosting Dr Mercola videos, mostly in languages other than English.

Figure 4: Results for channels linked to Dr Joseph Mercola.

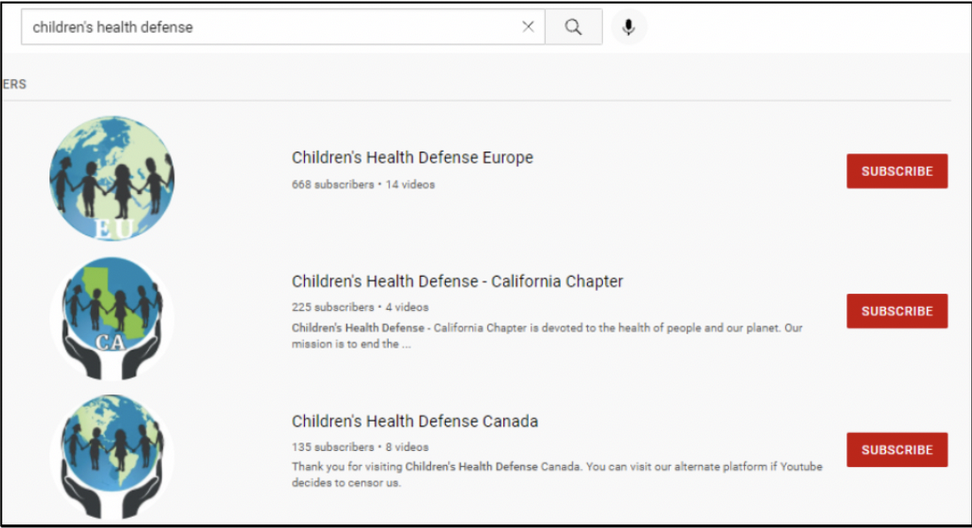

Similarly, a search for channels called ‘Children’s Health Defense’, the name of Robert F. Kennedy Jr’s anti-vaccine website, surfaced three accounts hosting videos from the influencer: one dedicated to Europe, another to California and a third to Canada.

Figure 5: Channels linked to Robert F. Kennedy Jr’s anti-vaccine website.

The results of this relatively simple analysis highlight major gaps in the enforcement of vaccine misinformation policies by YouTube. They show that although the platform promised to remove anti-vaccine ‘superspreaders’, many remain on the platform, hiding in plain sight. There is certainly scope for further research on the platform’s enforcement to determine the extent of these policy failures.

ISD’s previous work on COVID-19 mis- and disinformation includes Ill Advice: A Case Study in Facebook’s Failure to Tackle COVID-19 Disinformation, and ‘Climate Lockdown’ and the Culture Wars: How COVID-19 sparked a new narrative against climate action.

Aoife Gallagher is an Analyst on ISD’s Digital Research Unit, focusing on the intersection between far-right extremism, disinformation and conspiracy theories, and using a mixture of data analysis, open source intelligence and investigative techniques to understand the online ecosystem where these ideas flourish and spread. Previously, Aoife was a journalist with the online news agency Storyful, and she has completed an MA in Journalism from TU Dublin.